The Future of Generative AI in Education

MIT Open Learning | December 18, 2023

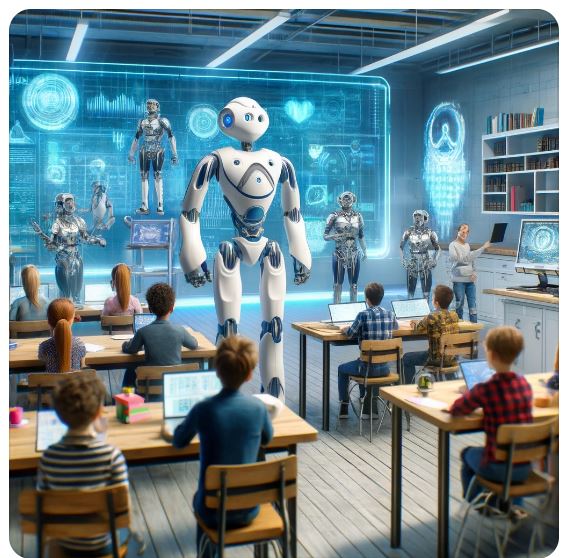

Imagine a world where powerful technology changes the way we learn every day. That's exactly what generative AI is doing to the education landscape right now. During a symposium hosted by MIT Open Learning, educators gathered to discuss how this technology can positively shape the future of learning. They agreed on one thing: educators must first understand what they want for students before using AI as a tool. Let's explore what they learned together.

Generative AI's Impact on Education

Students are already using tools like ChatGPT for homework help. While this can be helpful, educators worry that it might prevent students from developing their skills, especially in writing. If an AI writes an entire essay for you, how will you learn the craft of writing? However, experts at the symposium also saw AI as an opportunity to reshape learning. If educators clearly identify what they want their students to learn, they can adjust their lessons to include activities that require higher thinking skills. AI can help students think creatively and understand the deeper aspects of their work.

Beyond technical skills, Mitch Resnick, a professor at the MIT Media Lab, believes that students should be encouraged to think about the processes behind what they create. His team created Scratch, a programming language that millions of children and adults use worldwide. He emphasizes that learning to think creatively and strategically is just as important as learning the mechanics of coding.

Rethinking Traditional Education

Today's rapidly changing world requires students to adapt and think critically. Educators are now prioritizing teaching strategies that help students become adaptable, creative, and curious thinkers. Instead of simply memorizing information, students should engage in hands-on projects that require collaboration and problem-solving. Educators can use generative AI to help develop these high-level skills in students.

Janet Rankin, director of the MIT Teaching + Learning Lab, said that educators need to be clear about their goals for students before figuring out how AI can help. While past technologies like calculators and the internet had mixed results in schools, understanding what we want students to achieve is key to integrating AI effectively.

Shaping a New Model for Learning

In many schools, the traditional method where teachers lecture and students listen is still dominant. But many researchers believe a more hands-on, project-based approach, where students learn by doing, is more effective. The challenge now is figuring out how AI can support this approach. Educators like Justin Reich, director of the MIT Teaching Systems Lab, emphasize that understanding how schools and students operate is crucial for designing useful AI tools.

Pattie Maes, a professor at MIT Media Lab, dreams of a device that could always be with students, offering personalized advice. This "mentor" device would provide useful suggestions and provoke deeper thinking based on what the student experiences. By constantly understanding their context, it would encourage students to see the world differently and explore their ideas in greater depth.

Overall, generative AI has already made its mark on education, and it's up to educators to guide its future in a way that best helps students learn and grow.

Shortcomings of the Discourse at MIT and What Was Not Focused On

Overemphasis on Technology: There's a tendency to focus on the potential of AI without fully considering the practical challenges it brings. Some discussions highlight AI as the answer to numerous educational problems without acknowledging how it might amplify existing issues, like unequal access to technology or varying levels of digital literacy.

Loss of Essential Skills: By using AI for tasks like essay writing, students might miss out on essential skills such as critical thinking, writing mechanics, and independent problem-solving. Relying too much on AI-generated content may hinder students' development in these core areas.

Equity and Access: The integration of AI in education assumes that all students have equal access to these tools. However, digital divides exist, and not all students have the resources or infrastructure to benefit from AI-driven learning.

Ethical Concerns and Bias: Generative AI systems can perpetuate biases present in the data they are trained on. If these tools influence how students learn, they may reinforce stereotypes or present a limited perspective, particularly in content generation.

Teacher Dependency and Trust: Educators may become overly reliant on AI tools, leading to a lack of personal engagement with their students. This could diminish the critical teacher-student relationship and foster an overreliance on technology for decision-making.

Oversimplification of Learning Outcomes: The focus on how AI can streamline education may oversimplify learning outcomes. Education is not just about acquiring information; it's about developing character, social skills, and creativity, which AI might struggle to measure or enhance accurately.

Privacy and Data Security: AI in education relies on collecting large amounts of data about students, which raises concerns about data privacy and security. Mismanagement could lead to data leaks or unauthorized use of personal information.

The Unethical Stance of Pattie Maes in Proposing a Digital Mentor Device

Pattie Maes, a professor at MIT Media Lab, envisions a future where a digital mentor device could accompany students everywhere, providing personalized advice and encouraging them to think deeply. While such a tool could revolutionize how we approach learning, it also raises significant concerns around privacy and health. Here's a look into the potential issues that could arise from an ever-present device meant to guide students.

Privacy Invasion

To be effective, the proposed device would need to gather a considerable amount of personal data about the student's behavior, location, and daily interactions. This is where the risk to student privacy emerges. Such a device might be designed to monitor students to deliver highly personalized advice and insight, but that monitoring means collecting sensitive information around the clock.

Data collection on this scale could expose students to surveillance-like scrutiny, making them vulnerable to unintended consequences. Who controls this data, and how securely is it stored? The presence of detailed data about students’ academic performance, social interactions, and personal preferences could lead to concerns about unauthorized access or misuse, particularly if the device or its data is hacked. Moreover, if private companies gain access to this information, they could manipulate the students' behavior through targeted marketing, profiling, or bias reinforcement.

In educational settings, this level of surveillance could also impact students' willingness to engage honestly in the learning process. Knowing that their every action is being monitored, students might suppress creativity or avoid mistakes to ensure their data profile remains spotless, ultimately hindering genuine learning and personal growth.

Health Consequences

Beyond privacy concerns, the health implications of an always-on digital companion are also noteworthy. Extended exposure to digital screens can lead to eye strain, headaches, and poor posture. This could become an issue if students rely too heavily on their devices for learning and personal interaction.

Moreover, being constantly connected to a mentor device might lead to increased stress and anxiety. If the device continuously prompts students to achieve more, it may put undue pressure on them to perform. This could aggravate existing mental health issues or create new anxieties about keeping up with educational expectations.

The dependency on such a device might also affect students' ability to interact with their peers or seek guidance from teachers and mentors. By leaning too much on a digital coach, students might lose valuable social skills and the ability to work independently, leading to issues with real-world interpersonal relationships.

Conclusion: An Ever-Present Mentor Device Should Not Be Allowed

While the concept of a device that can accompany students everywhere and offer personalized advice may appear promising, it poses significant risks to student privacy, mental health, and independence. Such a device would demand constant surveillance, collecting an unprecedented amount of sensitive personal data, which could be susceptible to misuse, hacking, or commercialization. This intrusion could stifle creativity and genuine learning, forcing students to focus more on avoiding mistakes than exploring their full potential.

Additionally, continuous reliance on this digital companion could have severe health implications. Extended screen time might lead to physical ailments like eye strain and poor posture, while relentless prompts to improve could exacerbate mental health issues. It would also create dependence on technology over developing critical social skills and the ability to work independently.

For these reasons, the risks of an ever-present educational device outweigh the potential benefits. Such a device should not be allowed, as it could jeopardize students' privacy, well-being, and personal growth. Instead, we should seek alternative, balanced approaches that support education without compromising student safety or independence.